Abstract

Nowadays, autonomous cars can drive smoothly in ordinary cases, and it is widely recognized that realistic sensor simulation will play a critical role in solving the remaining corner cases by simulating them. To this end, we propose an autonomous driving simulator based on neural radiance fields (NeRFs). Compared with existing works, ours has three notable features:

(1) Instance-aware. Our simulator models the foreground instances and background environments separately with independent networks so that the static (e.g., size and appearance) and dynamic (e.g., trajectory) properties of instances can be controlled separately.

(2) Modular. Our simulator allows flexible switching between different modern NeRF-related backbones, sampling strategies, input modalities, etc. We expect this modular design to boost academic progress and industrial deployment of NeRF-based autonomous driving simulation.

(3) Realistic. Our simulator sets new state-of-the-art photo-realism results given the best module selection. Our simulator will be open-sourced, while most of our counterparts are not.
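The instance-aware and modular design described in (1) and (2) can be illustrated with a small data structure. This is a minimal sketch under our own assumptions, not the released code: the registry `SAMPLERS`, the placeholder names in `BACKBONES`, the classes `Node` and `InstanceNode`, the helper `build_node`, and the `(x, y, z, yaw)` trajectory format are all hypothetical. Samplers are plain functions that return sample depths, so switching between them is a one-word configuration change, and each instance keeps its static properties (box size, appearance code) apart from its dynamic trajectory.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A sampler maps (near, far, n_samples) to sample depths along a ray.
Sampler = Callable[[float, float, int], List[float]]


def uniform_depths(near: float, far: float, n: int) -> List[float]:
    """Bin-centre depths spaced evenly in depth."""
    step = (far - near) / n
    return [near + (i + 0.5) * step for i in range(n)]


def disparity_depths(near: float, far: float, n: int) -> List[float]:
    """Bin-centre depths spaced evenly in inverse depth (denser near the camera)."""
    inv_near, inv_far = 1.0 / near, 1.0 / far
    step = (inv_far - inv_near) / n
    return [1.0 / (inv_near + (i + 0.5) * step) for i in range(n)]


# Hypothetical registries: a module is selected by name from a config.
SAMPLERS: Dict[str, Sampler] = {"uniform": uniform_depths, "disparity": disparity_depths}
BACKBONES = {"mlp", "hash-grid"}  # placeholder names only; no real networks here


@dataclass
class Node:
    """A scene node (background or instance) with its own backbone and sampler."""
    backbone: str
    sampler: Sampler


@dataclass
class InstanceNode(Node):
    # Static properties: shared by every frame in which the instance appears.
    size: Tuple[float, float, float] = (1.0, 1.0, 1.0)
    appearance: List[float] = field(default_factory=list)
    # Dynamic property: frame index -> (x, y, z, yaw) of the bounding box.
    trajectory: Dict[int, Tuple[float, float, float, float]] = field(default_factory=dict)


def build_node(cfg: Dict[str, str]) -> Node:
    """Build a node from a config such as {"backbone": "mlp", "sampler": "uniform"}."""
    if cfg["backbone"] not in BACKBONES:
        raise KeyError(f"unknown backbone: {cfg['backbone']}")
    return Node(cfg["backbone"], SAMPLERS[cfg["sampler"]])


# Usage: swap modules through the config, then edit one instance's trajectory.
background = build_node({"backbone": "hash-grid", "sampler": "disparity"})
car = InstanceNode("mlp", uniform_depths, size=(4.0, 1.8, 1.5),
                   trajectory={0: (5.0, 0.0, 0.0, 0.0)})
car.trajectory[0] = (8.0, 2.0, 0.0, 0.0)  # moves the car; size and appearance are untouched
```

Because the static and dynamic fields live in separate attributes, editing a trajectory cannot accidentally change an instance's shape or appearance.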

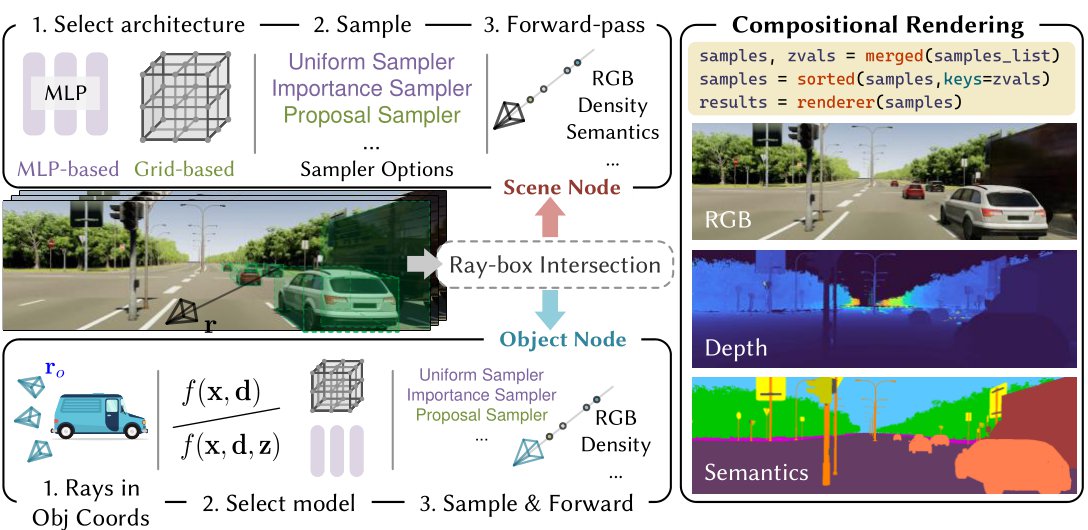

Method pipeline. Left: We first compute the ray-box intersections between the queried ray and all visible instance bounding boxes. For the background node, we directly use the selected scene representation model and the chosen sampler to infer point-wise properties, as in conventional NeRFs. For the foreground nodes, the ray is first transformed into the instance frame before being processed by the foreground node representations. Right: All the samples are composed and rendered into RGB images, depth maps, and semantics.
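The per-ray steps of this pipeline can be sketched with NumPy. This is a minimal sketch under stated assumptions, not the project's implementation: each instance box is described by a hypothetical 4x4 `box_to_world` matrix and a full extent `size`, the intersection uses the standard slab test, and samples from all nodes are merged, sorted by depth, and alpha-composited with the usual NeRF quadrature. The function names are our own.

```python
import numpy as np


def world_ray_to_instance(origin, direction, box_to_world):
    """Express a world-frame ray in an instance's box frame (inverse rigid transform)."""
    R, t = box_to_world[:3, :3], box_to_world[:3, 3]
    return R.T @ (origin - t), R.T @ direction


def ray_box_intersection(origin, direction, size):
    """Slab test against a box of full extent `size`, centred at the frame origin.

    Returns (t_near, t_far) along the ray, or None if the ray misses the box.
    """
    half = np.asarray(size, dtype=float) / 2.0
    # Replace exact zeros so the division stays finite for axis-parallel rays.
    d = np.where(direction == 0.0, 1e-12, direction)
    t0 = (-half - origin) / d
    t1 = (half - origin) / d
    t_near = max(np.max(np.minimum(t0, t1)), 0.0)  # ignore hits behind the camera
    t_far = np.min(np.maximum(t0, t1))
    return (t_near, t_far) if t_far >= t_near else None


def composite(t, sigma, rgb):
    """Merge samples from all nodes by depth and volume-render colour and depth.

    t: (N,) sample depths, sigma: (N,) densities, rgb: (N, 3) colours.
    """
    order = np.argsort(t)
    t, sigma, rgb = t[order], sigma[order], rgb[order]
    delta = np.append(np.diff(t), 1e10)  # last interval treated as unbounded
    alpha = 1.0 - np.exp(-sigma * delta)
    # Transmittance: probability the ray reaches each sample unoccluded.
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1]))
    weights = trans * alpha
    return (weights[:, None] * rgb).sum(axis=0), float((weights * t).sum())
```

Background samples and the instance-frame samples of every hit box are concatenated before calling `composite`; in this sketch, the same `weights` could also be applied to per-sample semantic logits to obtain a semantic map.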

Results

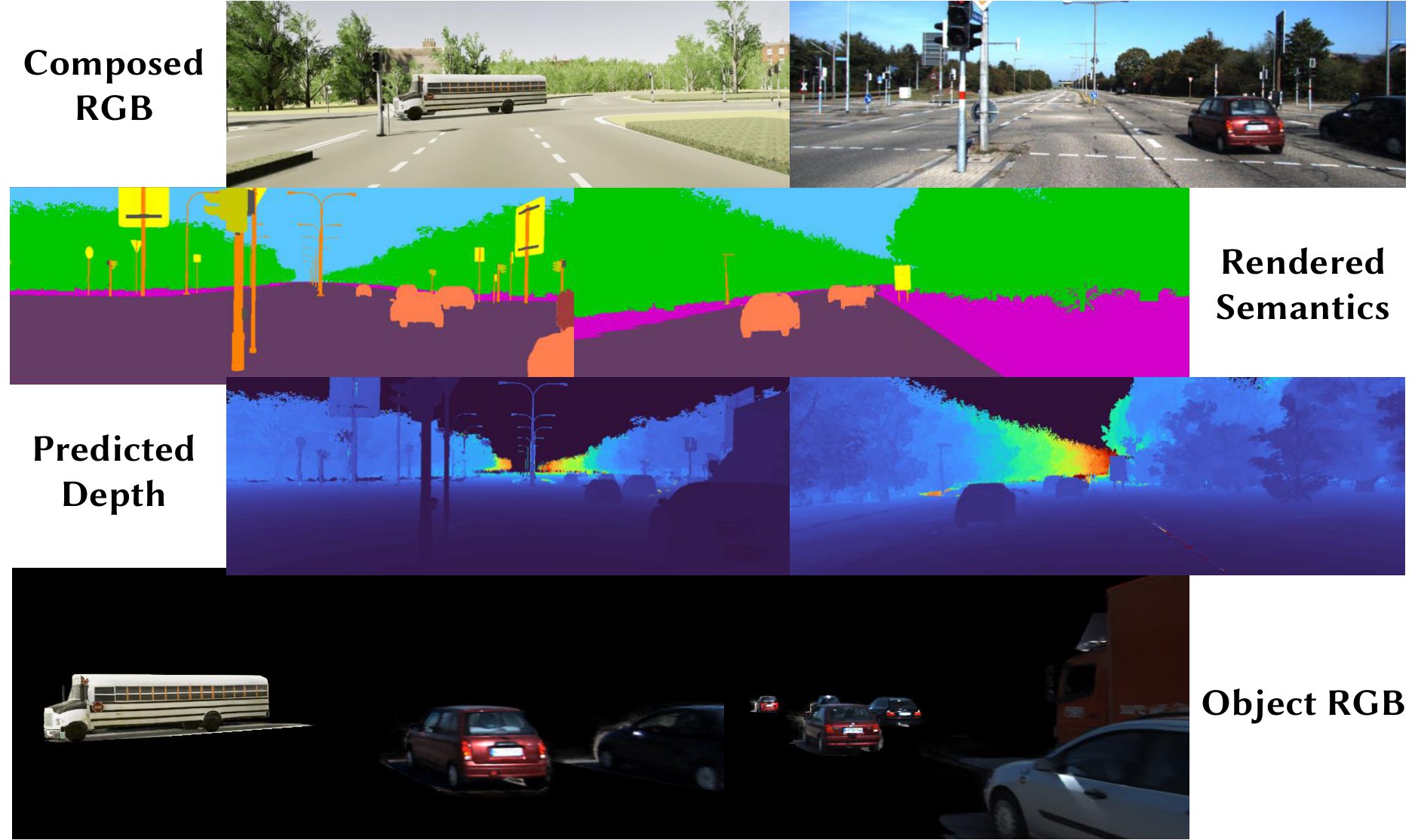

Virtual-KITTI-2

A reconstruction of the Virtual-KITTI-2 dataset (Scene02).

KITTI-MOT

A reconstruction of the KITTI dataset (Sequence 0006).

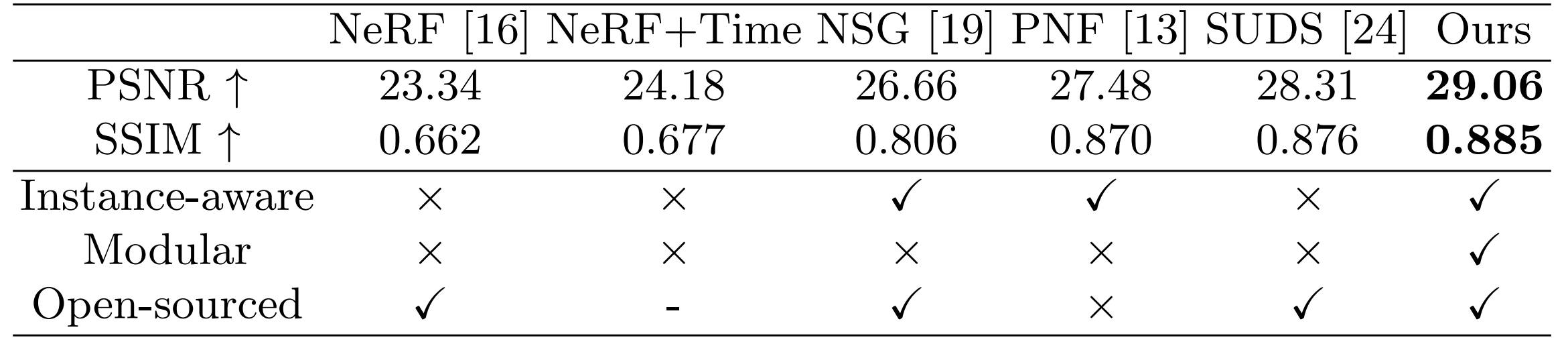

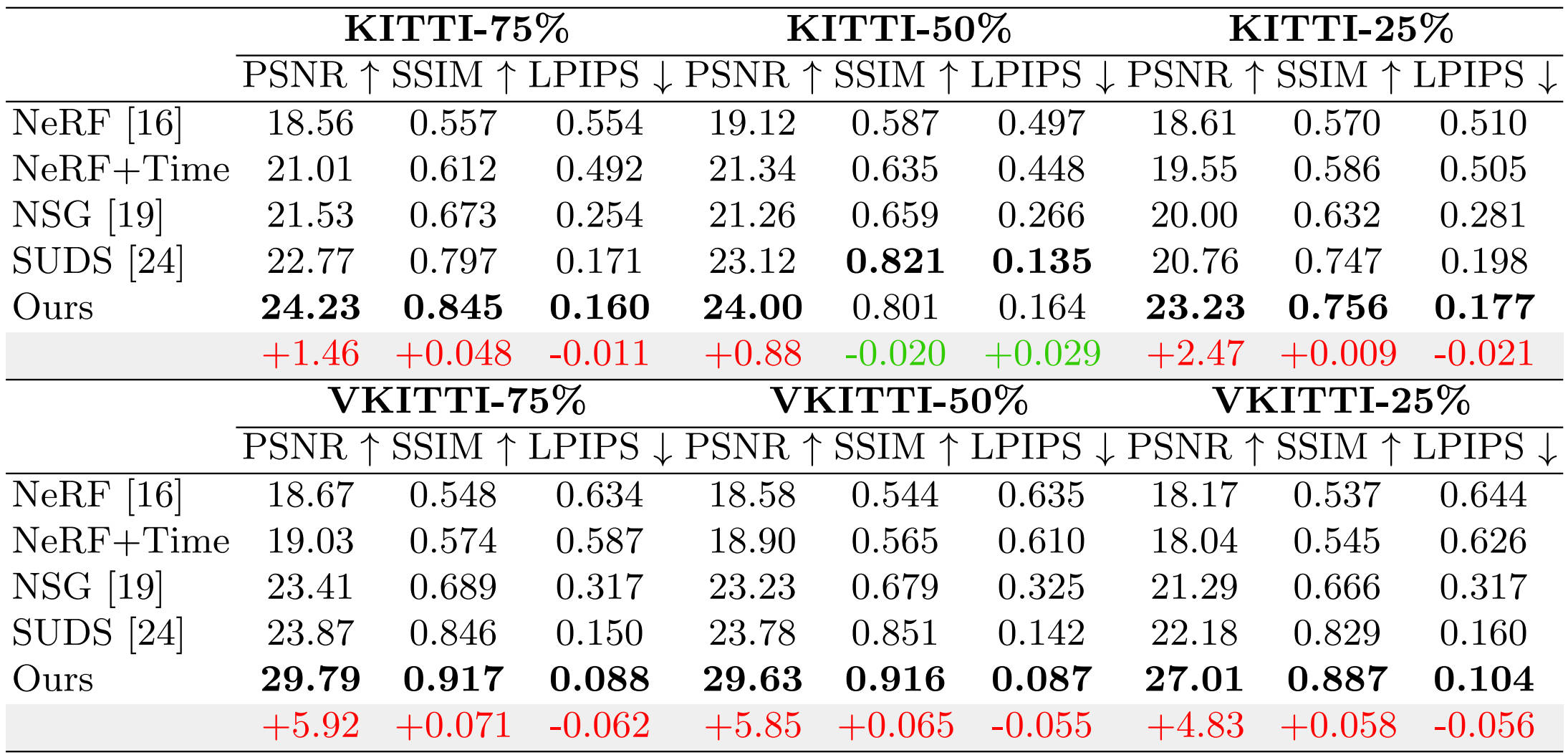

Quantitative results on the image reconstruction task (KITTI) and comparisons with baseline methods under their settings.

Editing

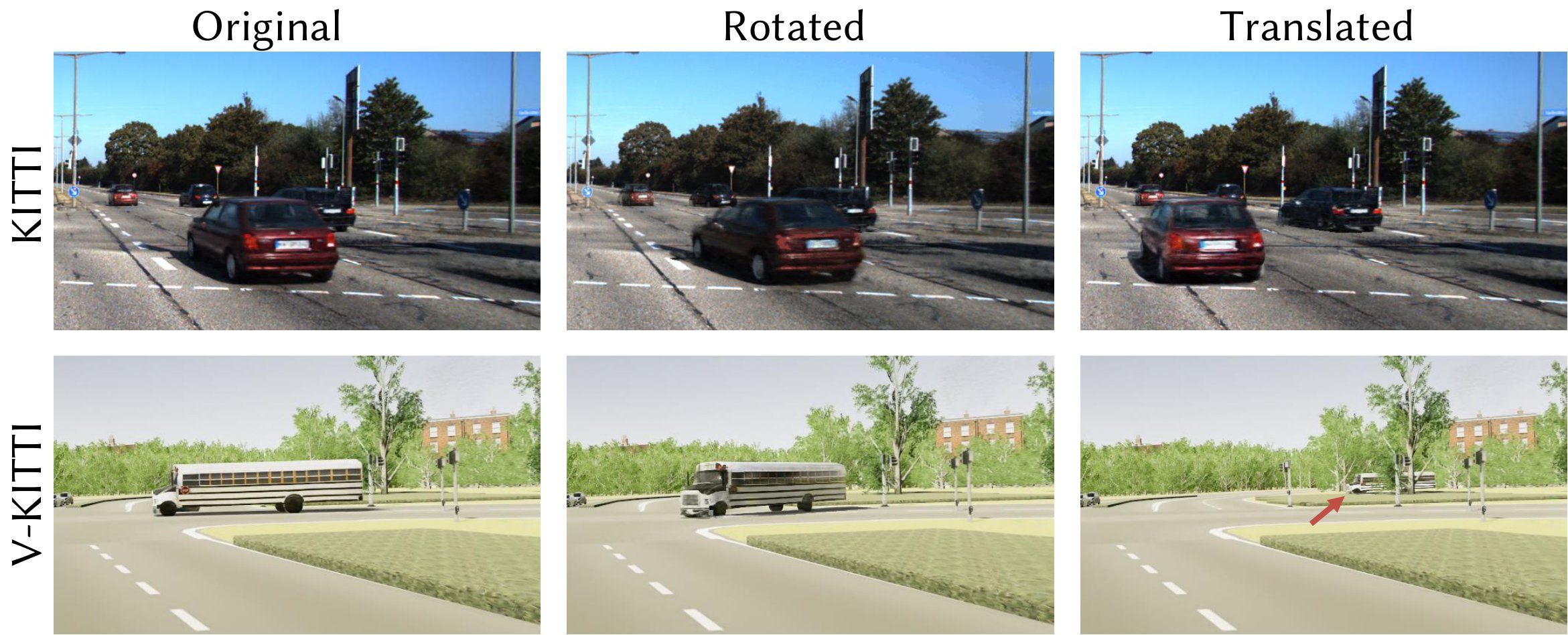

We first show some editing clips on KITTI/V-KITTI.

Rotation & Translation

The scene can also be edited by changing the pose of the camera.
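Editing by rotation and translation amounts to composing a rigid transform with a pose. The sketch below is our own illustration, not the project's editing code: it assumes 4x4 camera-to-world matrices and a z-up world frame, and the helpers `rigid_transform` and `edit_pose` are hypothetical. Left-multiplying applies the edit in world coordinates, while right-multiplying applies it in the camera's own axes; the same composition works for an instance's box-to-world pose.

```python
import numpy as np


def rigid_transform(yaw: float, translation) -> np.ndarray:
    """4x4 transform: rotate by `yaw` radians about the z axis, then translate."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def edit_pose(cam_to_world: np.ndarray, yaw: float, translation, local: bool = False) -> np.ndarray:
    """Apply a yaw rotation and a translation to a camera-to-world pose.

    local=False: the edit is expressed in world coordinates (delta @ pose).
    local=True:  the edit is expressed in the camera's own axes (pose @ delta).
    """
    delta = rigid_transform(yaw, translation)
    return cam_to_world @ delta if local else delta @ cam_to_world


# Usage: orbit a camera at (1, 0, 0) by 90 degrees about the world z axis.
pose = rigid_transform(0.0, [1.0, 0.0, 0.0])
orbited = edit_pose(pose, np.pi / 2, [0.0, 0.0, 0.0])
# Then move it one unit along its own (now rotated) x axis.
stepped = edit_pose(orbited, 0.0, [1.0, 0.0, 0.0], local=True)
```

After the orbit the camera sits near (0, 1, 0); the local step moves it to about (0, 2, 0), whereas the same offset applied in world coordinates would land at (1, 1, 0).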


Waymo Open Dataset

We also provide an additional editing result on the Waymo Open Dataset.

Experiment results

Gallery of different rendering channels.

Quantitative results on novel view synthesis.

Related Links

Several excellent works were introduced around the same time as ours.

SUDS: Scalable Urban Dynamic Scenes factorizes the scene into three separate hash tables to efficiently encode static, dynamic, and far-field parts of the scene.

UniSim creates manipulable digital twins from recorded sensor data.

Excellent prior works include Neural Scene Graph and Panoptic Neural Fields, among others.

NeRF and Beyond: A friendly research community about NeRF.

BibTeX

@article{wu2023mars,
  author = {Wu, Zirui and Liu, Tianyu and Luo, Liyi and Zhong, Zhide and Chen, Jianteng and Xiao, Hongmin and Hou, Chao and Lou, Haozhe and Chen, Yuantao and Yang, Runyi and Huang, Yuxin and Ye, Xiaoyu and Yan, Zike and Shi, Yongliang and Liao, Yiyi and Zhao, Hao},
  title = {MARS: An Instance-aware, Modular and Realistic Simulator for Autonomous Driving},
  journal = {CICAI},
  year = {2023},
}